Method

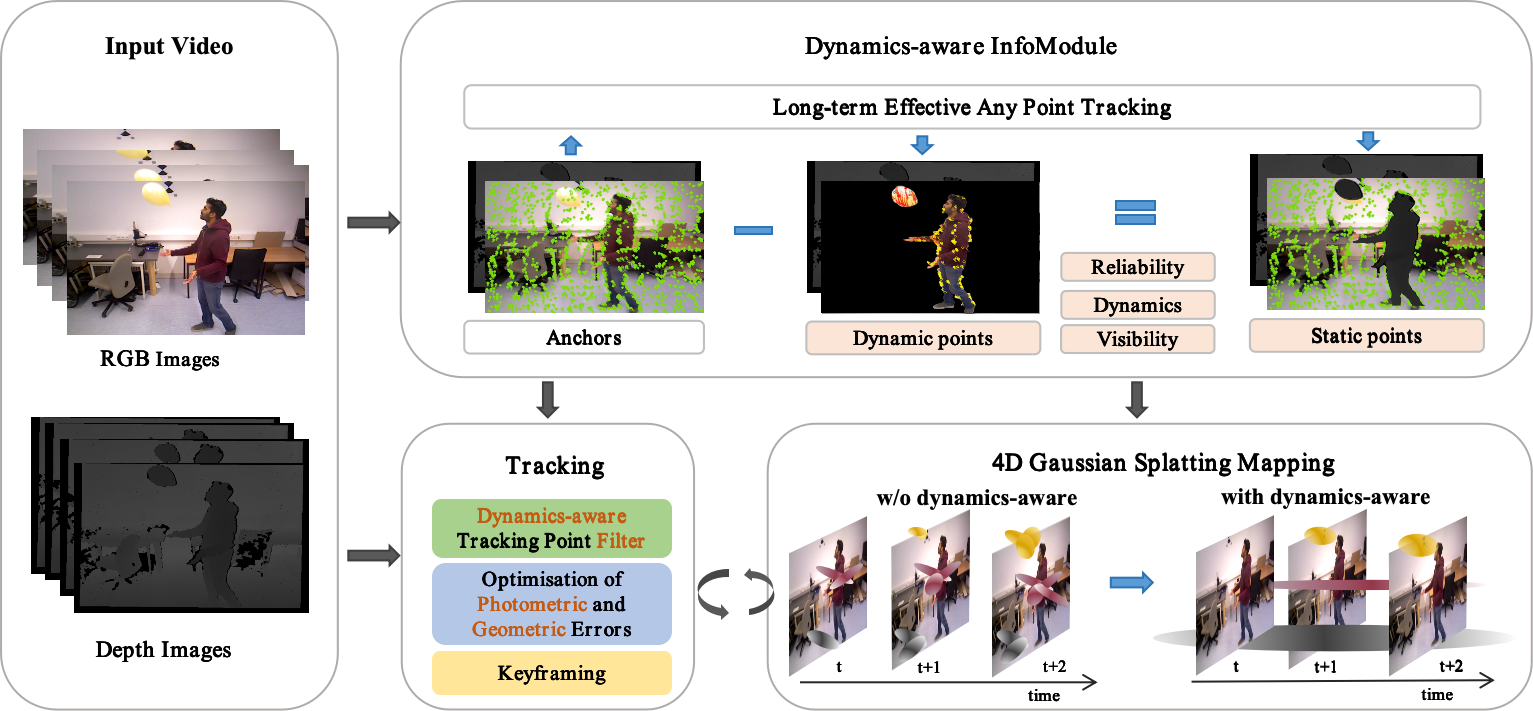

D4DGS-SLAM takes an RGB-D image sequence as input. For each incoming RGB frame, we first extract anchors that are well distributed across the whole image and capture its salient features. These anchors, together with the RGB images, are fed into the LEAP module, which estimates the dynamics and reliability of each anchor. This allows us to reliably distinguish dynamic points from static points. The static anchors are used for tracking to estimate the camera pose, and the resulting poses, together with the dynamic information, are passed to the mapping module. Mapping uses 4D Gaussian Splatting (4DGS): we select different scale penalty factors according to the dynamics and reliability of the points each Gaussian covers, which controls how the Gaussians are distributed in space-time.
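The pipeline above is described only in prose. The following is a minimal sketch of its front-end bookkeeping under stated assumptions: grid-based gradient maxima stand in for the paper's anchor extractor, the per-anchor dynamic and reliability scores are taken as given (in D4DGS-SLAM they come from LEAP), and the thresholds, penalty factors (`DYNAMIC_THRESHOLD`, `RELIABILITY_THRESHOLD`, `PENALTY`) and all function names are hypothetical placeholders, not the authors' values or API.

```python
import numpy as np

# HYPOTHETICAL thresholds and penalty factors: the project page gives no values.
DYNAMIC_THRESHOLD = 0.5      # dynamic score >= this -> treated as dynamic
RELIABILITY_THRESHOLD = 0.5  # reliability < this -> treated as unreliable
PENALTY = {"static": 1.0, "dynamic": 0.1, "unreliable": 0.5}


def grid_anchors(gray, cell=32):
    """Pick one anchor per grid cell: the pixel with the largest gradient magnitude.

    Assumed stand-in for globally well-distributed, feature-aware anchors.
    Returns an (N, 2) int array of (x, y) pixel coordinates.
    """
    gy, gx = np.gradient(gray.astype(np.float64))
    mag = np.hypot(gx, gy)
    h, w = gray.shape
    anchors = []
    for y0 in range(0, h - cell + 1, cell):
        for x0 in range(0, w - cell + 1, cell):
            block = mag[y0:y0 + cell, x0:x0 + cell]
            dy, dx = np.unravel_index(np.argmax(block), block.shape)
            anchors.append((x0 + dx, y0 + dy))
    return np.array(anchors, dtype=np.int64).reshape(-1, 2)


def classify_anchors(dynamic_score, reliability):
    """Label each anchor 'static', 'dynamic', or 'unreliable' from per-anchor scores."""
    labels = np.full(dynamic_score.shape, "unreliable", dtype="<U10")
    reliable = reliability >= RELIABILITY_THRESHOLD
    labels[reliable & (dynamic_score >= DYNAMIC_THRESHOLD)] = "dynamic"
    labels[reliable & (dynamic_score < DYNAMIC_THRESHOLD)] = "static"
    return labels


def scale_penalty_factors(labels):
    """Look up the (hypothetical) scale penalty factor for each label."""
    return np.array([PENALTY[str(l)] for l in labels], dtype=np.float64)


def scale_regularizer(scales, factors):
    """Weighted scale penalty: mean over Gaussians of factor * ||scale||_2."""
    return float(np.mean(factors * np.linalg.norm(scales, axis=1)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    anchors = grid_anchors(rng.random((64, 96)))  # 2 x 3 grid -> 6 anchors
    dyn = np.array([0.9, 0.1, 0.2, 0.8, 0.3, 0.7])
    rel = np.array([0.9, 0.9, 0.2, 0.9, 0.8, 0.1])
    labels = classify_anchors(dyn, rel)
    static_for_tracking = anchors[labels == "static"]  # only these feed pose estimation
    print(labels.tolist())  # ['dynamic', 'static', 'unreliable', 'dynamic', 'static', 'unreliable']
    print(len(static_for_tracking))  # 2
```

In this sketch only anchors labelled static would reach the tracker, and the factors from `scale_penalty_factors` would weight a per-Gaussian scale term during 4DGS optimisation. That is one plausible reading of the description above, not the published loss.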

Results

Mapping

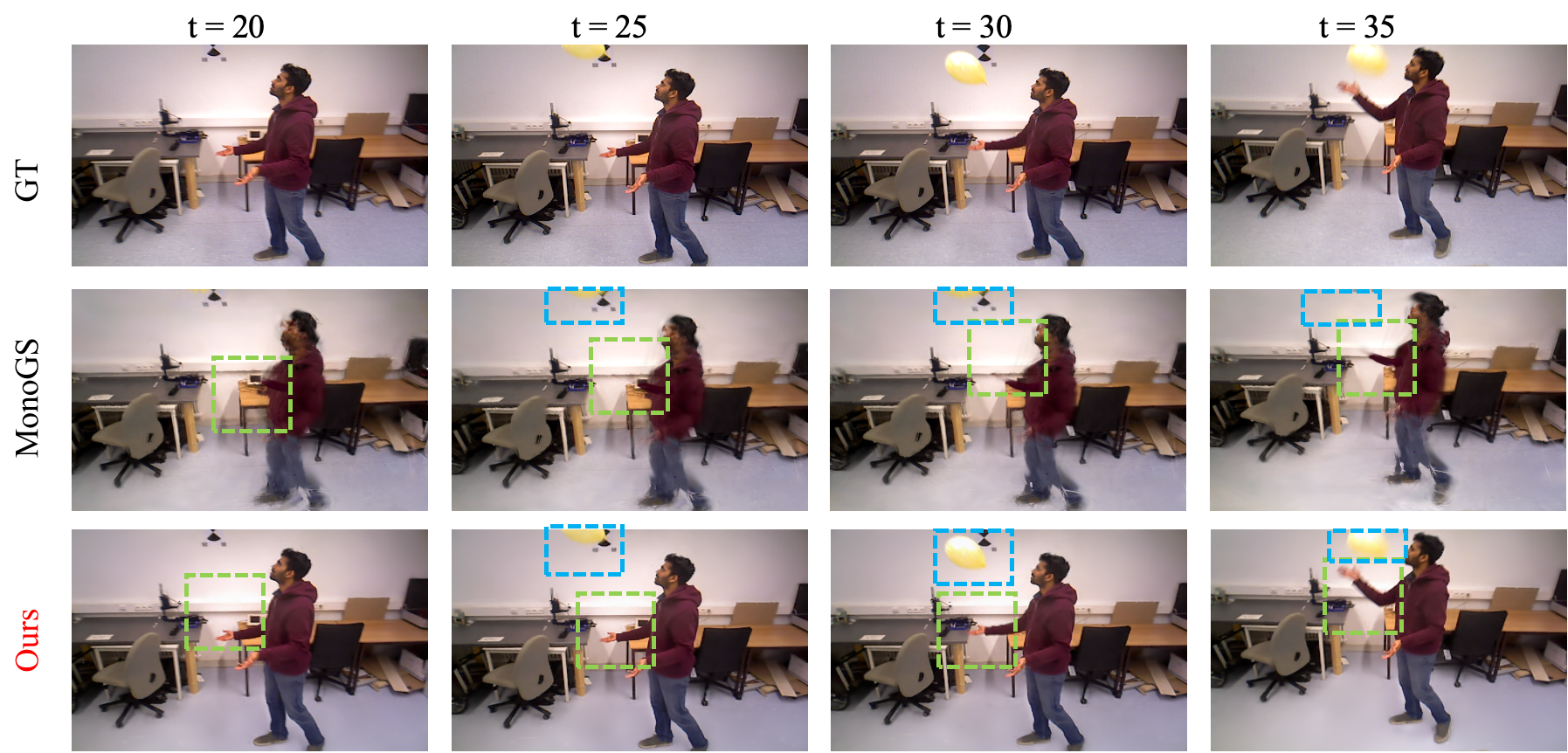

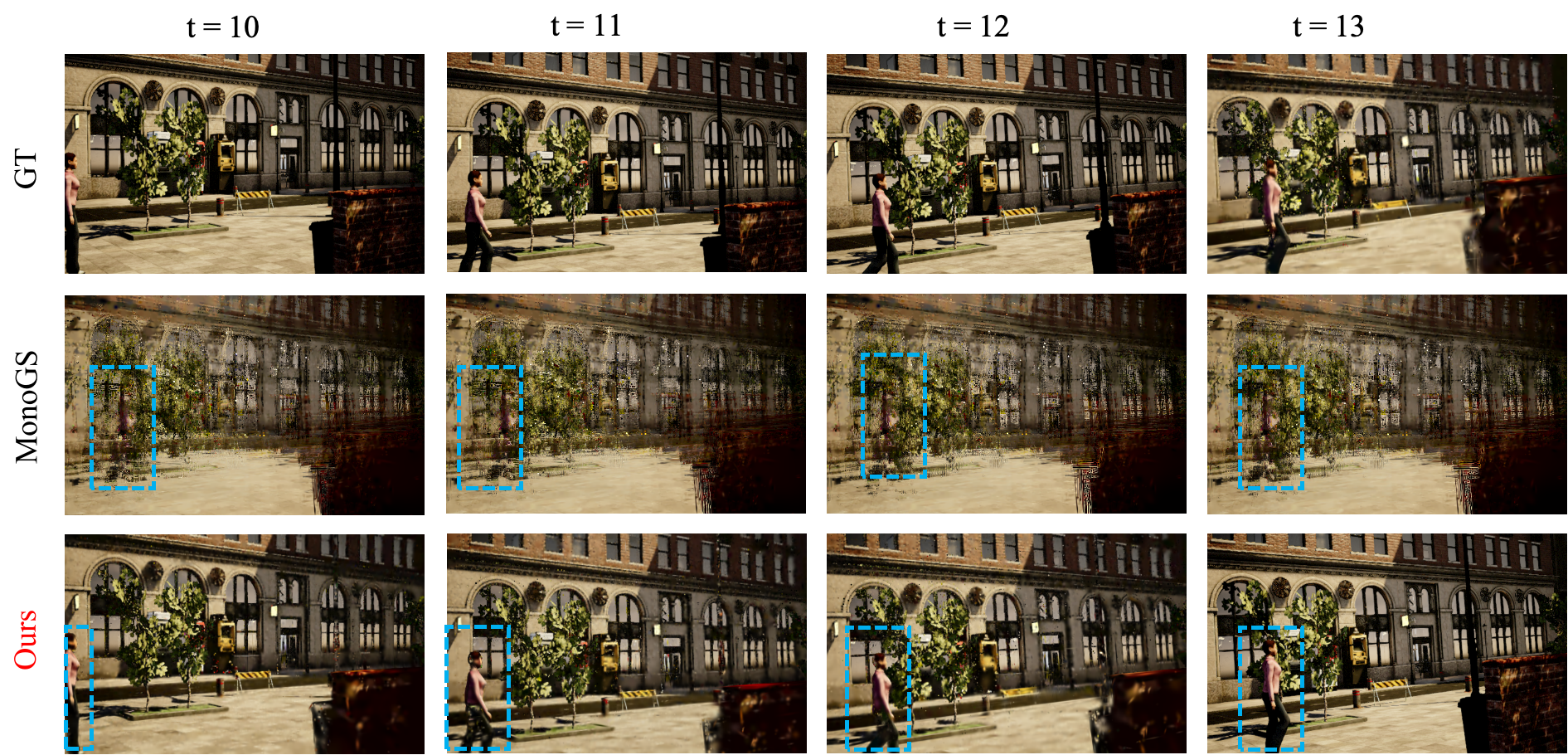

Visual comparison of rendered images on the TartanAir-Shibuya dataset.

Tracking

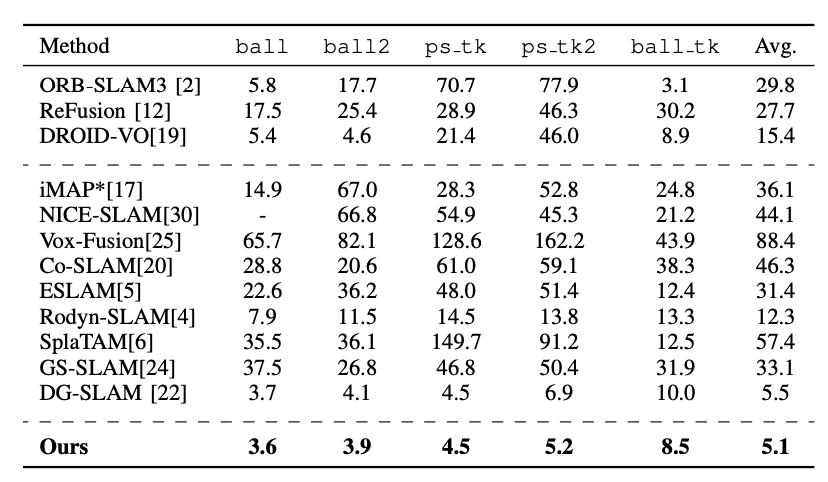

ATE RMSE results on several dynamic-scene sequences from the BONN dataset. "∗" denotes the version reproduced by NICE-SLAM; "-" denotes a tracking failure. Unit: cm.
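The table above reports ATE RMSE in centimetres. As a minimal sketch, assuming the common TUM-style protocol (estimated and ground-truth camera positions already associated by timestamp, followed by a rigid SE(3) alignment with the Kabsch/Umeyama closed form and no scale), the metric could be computed as below. The page does not state which alignment the authors used, and `align_rigid` and `ate_rmse_cm` are hypothetical helper names.

```python
import numpy as np


def align_rigid(est, gt):
    """Rotation R and translation t minimising sum ||R @ e_i + t - g_i||^2 (Kabsch, no scale)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(3)
    D[2, 2] = np.sign(np.linalg.det(Vt.T @ U.T))  # avoid returning a reflection
    R = Vt.T @ D @ U.T
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse_cm(est_m, gt_m):
    """ATE RMSE in cm for time-associated (N, 3) camera positions given in metres."""
    R, t = align_rigid(est_m, gt_m)
    residuals = gt_m - (est_m @ R.T + t)
    return 100.0 * float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    gt = rng.random((50, 3))  # ground-truth positions (m)
    c, s = np.cos(0.3), np.sin(0.3)
    Rz = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    est = gt @ Rz.T + np.array([1.0, 2.0, 3.0])  # same trajectory in another frame
    print(ate_rmse_cm(est, gt))  # near zero: the rigid offset is aligned away
```

Scale-aware evaluations instead use a Sim(3) alignment (Umeyama with scale), which would absorb part of any scale drift, so reported numbers depend on the chosen protocol.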

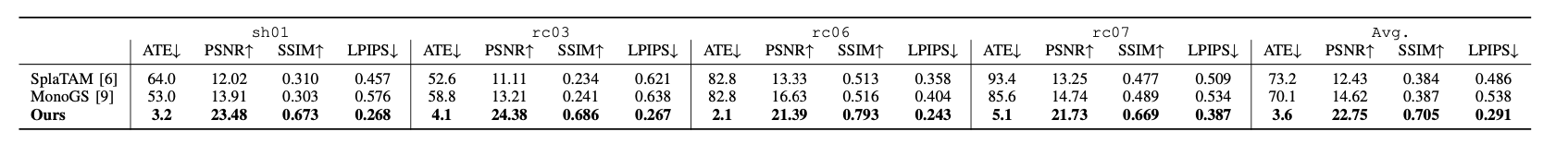

Camera tracking and mapping quality on several dynamic sequences in the TartanAir-Shibuya dataset.

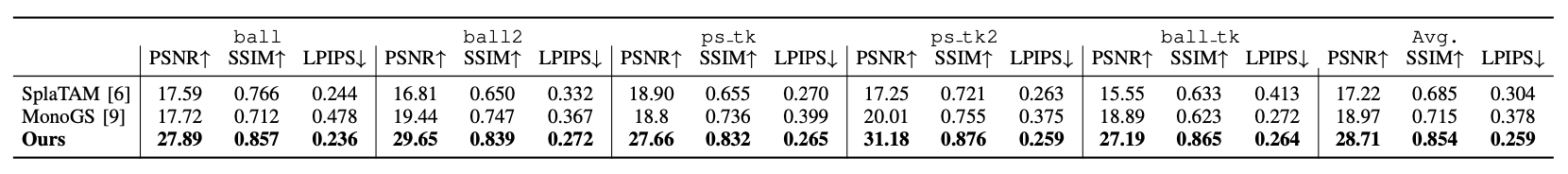

Map quality on several dynamic sequences in the BONN dataset.

BibTeX

@article{sun2025d4dgsslam,

title={Embracing Dynamics: Dynamics-aware 4D Gaussian Splatting SLAM},

author={Zhicong Sun and Jacqueline Lo and Jinxing Hu},

journal={arXiv preprint arXiv:2504.04844},

year={2025}

}

Acknowledgements

We acknowledge funding from the Research Grants Council of Hong Kong, the Hong Kong Polytechnic University, and the Shenzhen Institutes of Advanced Technology of the Chinese Academy of Sciences. This work also builds on several open-source codebases, and we thank the authors of the related works: 3D Gaussian Splatting, Differential Gaussian Rasterization, 4D Gaussian Splatting, Gaussian Splatting SLAM, LEAP-VO, and SplaTAM.